<!DOCTYPE html>

<html>

<head>

<title>Urban Sound Tagging Project</title>

<meta http-equiv="Content-Type" content="text/html; charset=UTF-8"/>

<link rel="stylesheet" href="./assets/katex.min.css">

<link rel="stylesheet" type="text/css" href="./assets/slides.css">

<link rel="stylesheet" type="text/css" href="./assets/grid.css">

</head>

<body>

<textarea id="source">

class: center, middle

# Urban Sound Tagging Project

<br/><br/>

.bold[Simon Leglaive]

<br/><br/>

.tiny[CentraleSupélec]

---

class: middle, center

# Introduction

---

class: middle, center

# You + deep learning = ❤️

.vspace[

]

.alert-g[

You have spent more than 10 hours learning the basics of deep learning, including PyTorch practice on toy examples.

Now it’s time to solve a real-world problem!

]

---

class: center, middle

<div style="text-align:center;margin-bottom:30px">

<iframe width="700" height="400" src="https://www.youtube.com/embed/d-JMtVLUSEg" frameborder="0" allow="autoplay; encrypted-media" style="max-width:100%" allowfullscreen="">

</iframe>

</div>

---

## Urban Sound Tagging

.small-vspace.left-column[<img src="http://d33wubrfki0l68.cloudfront.net/282c08f73c870b0d68e92024a0248ac73d051daa/91ec9/images/tasks/challenge2016/task4_overview.png" style="width: 470px;" />]

.right-column[Given a 10-second audio recording, predict the presence/absence of 8 urban sounds:

1. `engine`

2. `machinery-impact`

3. `non-machinery-impact`

4. `powered-saw`

5. `alert-signal`

6. `music`

7. `human-voice`

8. `dog`

]

.reset-column[

]

.small-nvspace[

]

This is a .bold[multi-label classification] problem: several of these sounds can be present at the same time in a recording.
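
---

class: middle

## Multi-label prediction

Because the classes are not mutually exclusive, a common approach is to give each of the 8 classes its own sigmoid output and to train with binary cross-entropy, instead of a softmax over classes. The plain-Python sketch below assumes the network outputs one real-valued score (logit) per class, in the order of the list above, and uses a hypothetical decision threshold of 0.5; the baseline may differ. In PyTorch, sigmoid and binary cross-entropy are combined in `torch.nn.BCEWithLogitsLoss`.

```python
import math

# Class order assumed to follow the list of the previous slide.
CLASSES = ["engine", "machinery-impact", "non-machinery-impact", "powered-saw",
           "alert-signal", "music", "human-voice", "dog"]

def sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def predict_tags(logits, threshold=0.5):
    # Independent decision per class: several tags can be returned at once.
    return [c for c, z in zip(CLASSES, logits) if sigmoid(z) >= threshold]

def bce_with_logits(logits, targets):
    # Mean binary cross-entropy over classes, in its stable "with logits" form.
    losses = [max(z, 0.0) - z * y + math.log1p(math.exp(-abs(z)))
              for z, y in zip(logits, targets)]
    return sum(losses) / len(losses)

logits = [2.3, -1.0, -3.2, -0.7, -2.5, -1.8, 1.1, -4.0]  # hypothetical scores for one clip
print(predict_tags(logits))  # ['engine', 'human-voice']
```

With a softmax, raising the score of `engine` would lower the probability of `human-voice`; with independent sigmoids, both can be predicted for the same clip.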

---

## Machine listening

.alert-g[

Machine listening focuses on developing algorithms to **analyze, interpret and understand audio data**, including speech, music, and environmental sounds.

]

--

It involves techniques from **signal processing** and **machine learning** to solve various tasks in

- **Speech processing**

  Automatic speech recognition, speaker identification and verification, speech enhancement, ...

- **Music information retrieval** (MIR)

  Chord and melody recognition, music genre classification, music recommendation, ...

- **Bio-** and **eco-acoustics**

  Animal call recognition, migration / biodiversity / noise pollution monitoring, ...

---

class: middle, center

# Audio signal representation

---

class: middle

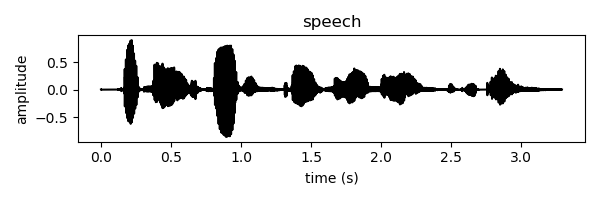

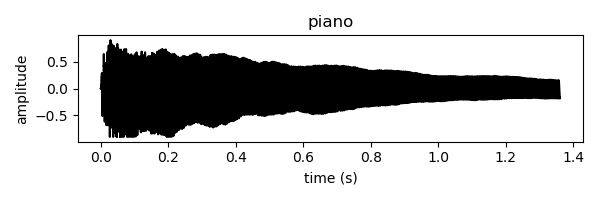

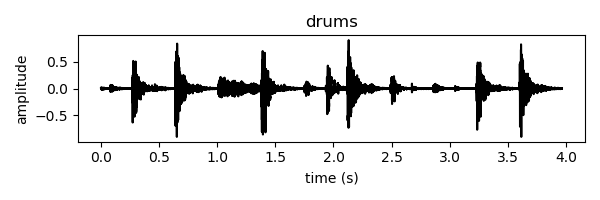

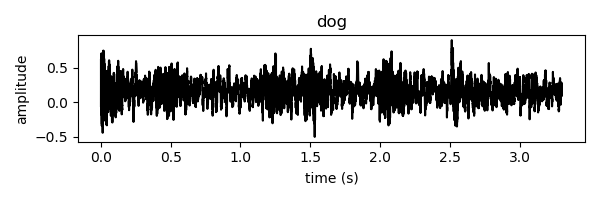

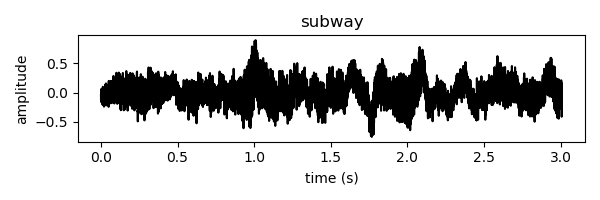

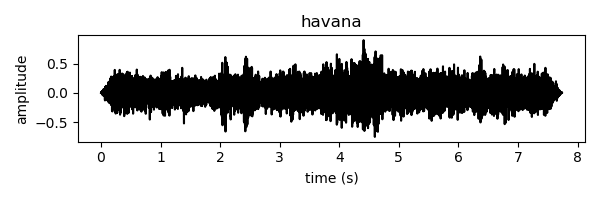

## Real-world sounds

Real-world sounds are complex, we need **representations** to highlight their characteristics.

.grid[

.kol-1-2[.width-80[]]

.kol-1-2[.width-80[]]

.kol-1-2[.width-80[]]

.kol-1-2[.width-80[]]

.kol-1-2[.width-80[]]

.kol-1-2[.width-80[]]

]

.center[Waveform representation of different sounds.]

---

class: middle

## Towards a “meaningful” representation

What are meaningful properties of an audio signal?

Let’s look at what musicians use to represent sounds: the musical score.

.center.width-80[]

A succession of “audio events” with indicators of **pitch**, **dynamics**, **tempo**, and **timbre**.

---

class: middle, center

Tempo and rhythm relate to **time** (measured in seconds).

Pitch and timbre relate to **frequency** (measured in Hertz).

Dynamics relates to **intensity** or **power** (measured in decibels).

.vspace[

]

.alert-g[Given the waveform of an audio signal, we would like to compute a representation highlighting the characteristics of the signal along these three dimensions.

**Such a representation is given by the spectrogram**.

]
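
---

class: middle

## Computing a spectrogram

A spectrogram is usually computed with the short-time Fourier transform (STFT): the waveform is cut into overlapping, windowed frames, and the spectrum of each frame is obtained with an FFT. The NumPy sketch below assumes a 1024-sample Hann window, a hop of 512 samples and a power spectrogram expressed in decibels; these settings are illustrative, not those of the baseline.

```python
import numpy as np

def power_spectrogram_db(x, n_fft=1024, hop=512, eps=1e-10):
    """Return a (frequency x time) power spectrogram in dB, shape (n_fft // 2 + 1, n_frames)."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(x) - n_fft) // hop
    # One windowed frame per column: the column index is the time axis.
    frames = np.stack([x[m * hop : m * hop + n_fft] * window for m in range(n_frames)], axis=1)
    power = np.abs(np.fft.rfft(frames, axis=0)) ** 2  # the row index is the frequency axis
    return 10.0 * np.log10(power + eps)  # intensity in dB (reference power 1, eps avoids log(0))

sr = 16000                        # hypothetical sampling rate (Hz)
t = np.arange(sr) / sr            # 1 second of time stamps
x = np.sin(2 * np.pi * 1000 * t)  # 1 kHz pure tone
S = power_spectrogram_db(x)
print(S.shape)                               # (513, 30)
print(S.mean(axis=1).argmax() * sr / 1024)   # 1000.0 (frequency of the loudest bin, in Hz)
```

Each column is one time frame and each row one frequency bin, so time, frequency and intensity (in dB) all appear in a single array.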

---

class: center, middle, black-slide

<iframe width="100%" height="100%" src="https://musiclab.chromeexperiments.com/Spectrogram/" frameborder="0" allowfullscreen></iframe>

---

class: middle

.alert-g[It is easier to **discriminate** between different sounds from their spectrogram representation than from their waveform.]

.center.width-30[]

.footnote[ .big[🧑‍🏫] .italic["From a pedagogical point of view, spectrograms are great for a deep learning project. <br>They can be (naively) seen as images (good for CNNs) or sequential data (good for RNNs)."]]
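
---

class: middle

## Spectrograms as network inputs

The remark on the previous slide can be made concrete with array shapes. The NumPy sketch below uses hypothetical sizes (64 frequency bands, 431 frames) and random values in place of real spectrograms. For reference, PyTorch's `nn.Conv2d` expects inputs of shape `(batch, channels, height, width)`, and recurrent layers such as `nn.GRU` created with `batch_first=True` expect `(batch, time, features)`.

```python
import numpy as np

batch, n_freq, n_frames = 8, 64, 431  # hypothetical sizes
rng = np.random.default_rng(0)
specs = rng.standard_normal((batch, n_freq, n_frames), dtype=np.float32)  # (batch, freq, time)

# CNN view: a one-channel "image" with frequency as height and time as width.
as_images = specs[:, np.newaxis, :, :]   # (batch, 1, freq, time)

# RNN view: a sequence of n_frames vectors, each holding the n_freq values of one frame.
as_sequences = specs.transpose(0, 2, 1)  # (batch, time, freq)

print(as_images.shape)     # (8, 1, 64, 431)
print(as_sequences.shape)  # (8, 431, 64)
```

The word "naively" matters: unlike the two axes of a photograph, time and frequency have different meanings, so treating a spectrogram exactly like an image is a simplification.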

---

class: middle, center

# Project organization

---

class: middle

## Agenda

- **You should work outside class hours**.

- 6 in-class sessions are scheduled to help you and to evaluate you:

  - 3rd session: Deadline + Evaluation 📌

  - 6th session: Deadline + Evaluation 📌

  See Edunao for the complete agenda.

- For in-class sessions to be useful for you, prepare material (figures, tables, reports, questions, clean code, ...).

---

class: middle

## Resources

.alert-g[

<img src="images/gitlab.svg" style="width: 50px;" />

<br/>https://gitlab-research.centralesupelec.fr/sleglaive/urban-sound-tagging-project

]

- All resources and instructions (read them carefully!) are available on GitLab.

- You are provided with a fully-functional baseline system.

- **Your task is to improve upon this baseline and propose a better urban sound tagging system** 🚀

---

class: middle

## Tools

- You must work with

.center[

<img src="images/pytorch.png" style="width: 150px;" />

<br/>https://pytorch.org/

]

- You will use

.center[

<img src="images/cs.jpeg" style="width: 70px;" />

<br/>https://mydocker.centralesupelec.fr/

]

to access computational resources.

---

class: middle

## Evaluation

In brief:

- 2 intermediate deadlines and evaluated sessions

- 1 final technical report per group

- 1 final video per student

In detail:

- See Edunao

---

class: middle

.alert[

The final performance of your system is not the objective and will not count toward your evaluation.

You should target a thoughtful, rigorous, organized and justified approach. This is what really matters, not the final scores.

]

---

class: middle, center

# Now, hands on!

</textarea>

<script src="./assets/remark-latest.min.js"></script>

<script src="./assets/auto-render.min.js"></script>

<script src="./assets/katex.min.js"></script>

<script type="text/javascript">

function getParameterByName(name, url) {

if (!url) url = window.location.href;

name = name.replace(/[\[\]]/g, "\\$&");

var regex = new RegExp("[?&]" + name + "(=([^&#]*)|&|#|$)"),

results = regex.exec(url);

if (!results) return null;

if (!results[2]) return '';

return decodeURIComponent(results[2].replace(/\+/g, " "));

}

var options = {sourceUrl: getParameterByName("p"),

highlightLanguage: "python",

// highlightStyle: "tomorrow",

// highlightStyle: "default",

highlightStyle: "github",

// highlightStyle: "googlecode",

// highlightStyle: "zenburn",

highlightSpans: true,

highlightLines: true,

ratio: "16:9"};

var renderMath = function() {

renderMathInElement(document.body, {delimiters: [ // mind the order of delimiters(!?)

{left: "$$", right: "$$", display: true},

{left: "$", right: "$", display: false},

{left: "\\[", right: "\\]", display: true},

{left: "\\(", right: "\\)", display: false},

]});

}

var slideshow = remark.create(options, renderMath);

</script>

</body>

</html>